Connecting People in a Distanced World

Snap’s Bitmoji. Apple’s Memoji. Giphy’s Stickers. When it comes to ease of use, few platforms have pulled off personalized user animation, and none has unlocked the potential for a completely motion-synced, 3D re-creation of a human form in real time. Until now.

For years, USC Viterbi graduate computer science researchers, including Ruilong Li, Kyle Olszewski, Yuliang Xiu, Shunsuke Saito and Zeng Huang, have created technologies that use artificial intelligence to capture full-body images of humans and re-create them as virtual avatars in real time. Not only can your avatar look like you and mimic your facial expressions, but it can walk like you, dance like you and shave your beard like you, because in essence, it is you.

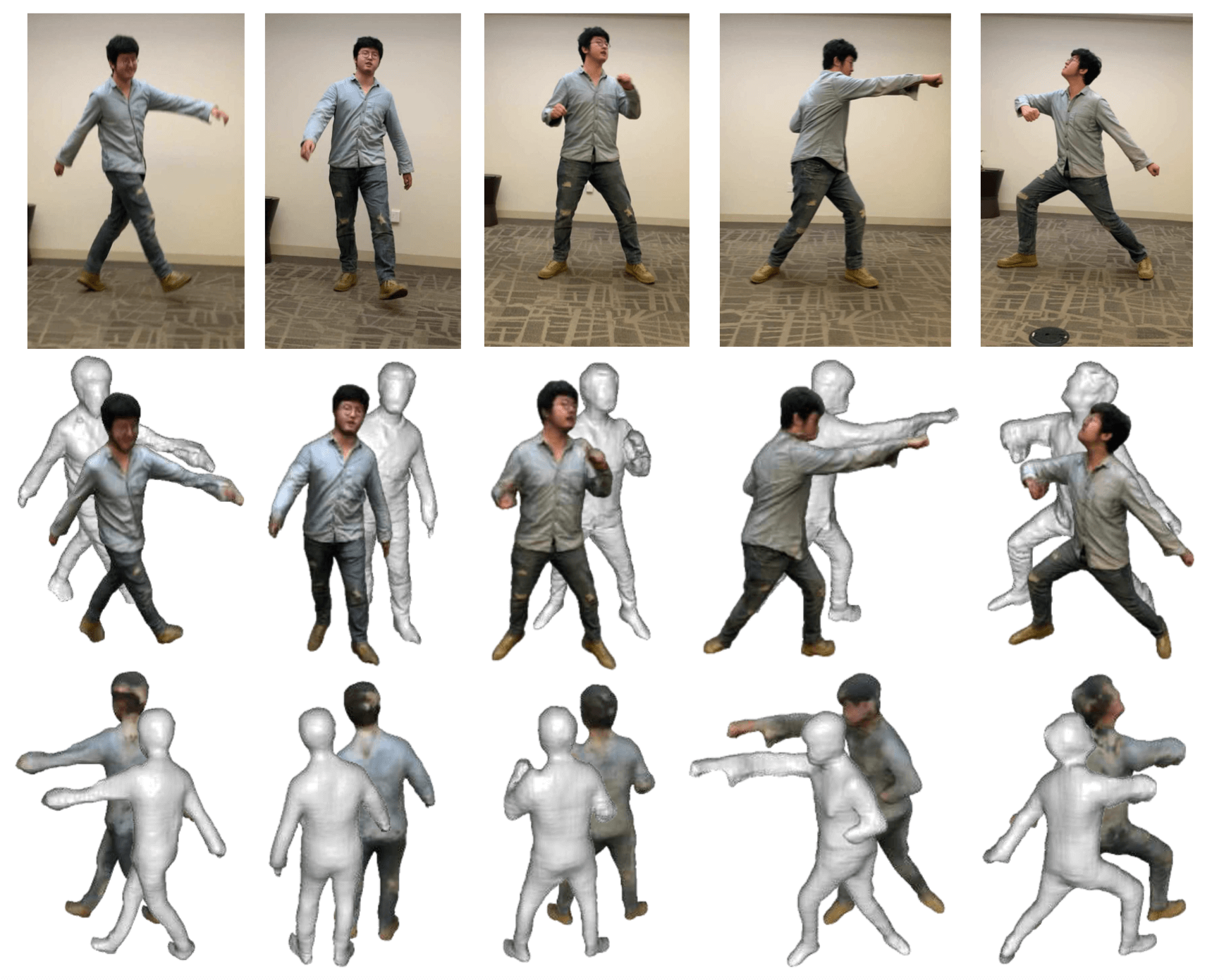

The team — advised by former USC Viterbi computer science professor Hao Li — competed at Real-Time Live! at the SIGGRAPH 2020 conference, which features the top original, interactive, real-time project demos of the year. On Aug. 25, they snagged, in a two-way tie, the Jury’s Choice Best in Show award for their technology called Volumetric Human Teleportation (VHT), the first system capable of capturing a completely clothed human body, including the back, in real time using a traditional phone camera or computer webcam.

“Getting the chance to present at Real-Time Live! was an honor already,” said Ruilong Li, a first-year computer science Ph.D. candidate. “We couldn’t have imagined we’d win Best in Show.”

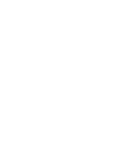

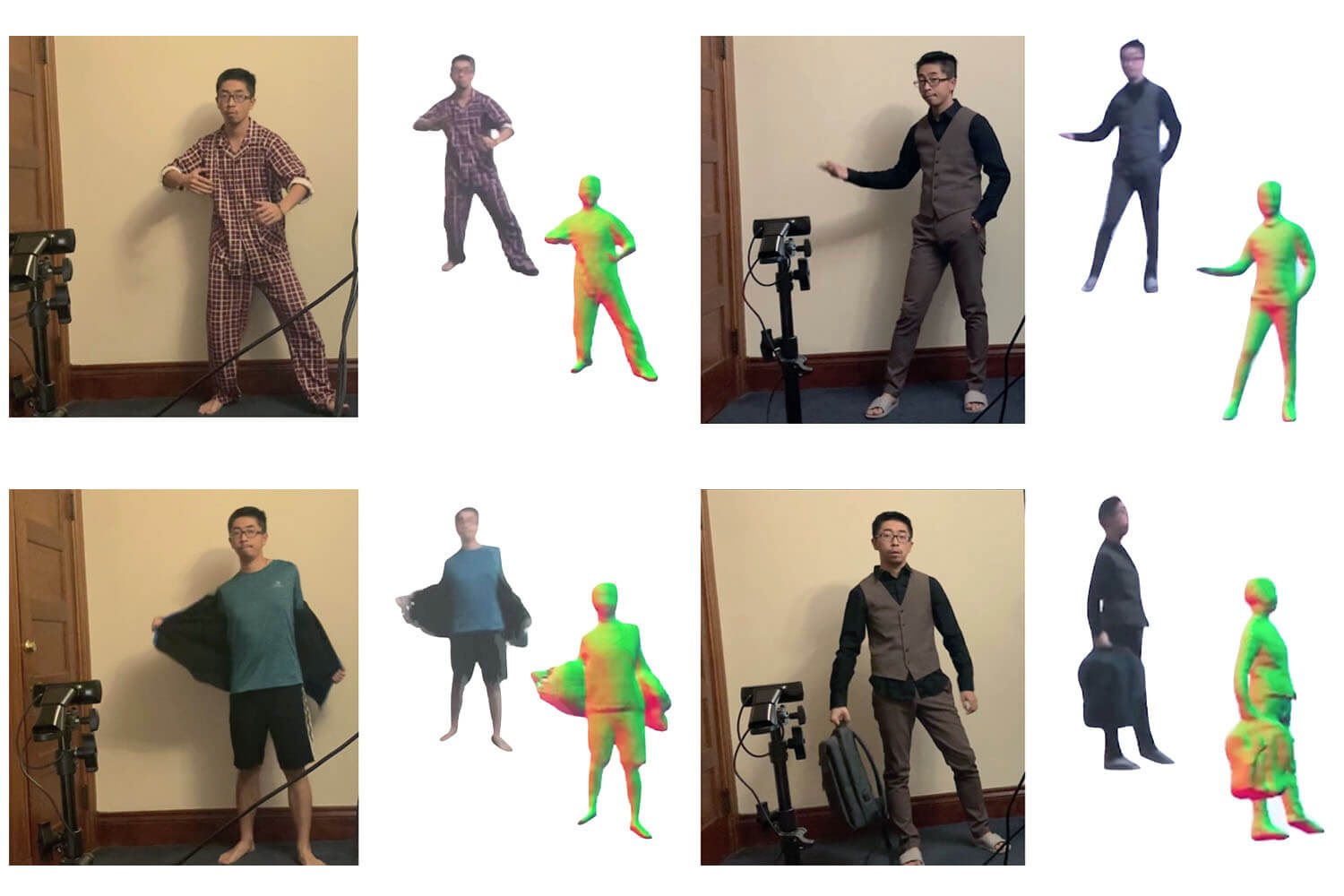

VHT showcases a mix of advanced computer graphics, interactive techniques and brilliant design. At its core, VHT is a computer, a camera and two GPUs that together capture a 2-D video stream of the human body, the way a webcam works. Simply put, VHT can take a full-body scan and produce a human avatar with the click of a button. Yes, it is that easy.

What differentiates the technology from other video-capture systems is its ability to reconstruct volume in real time. From the video stream, VHT uses a deep-learning algorithm to produce a clay-like, 3-D model of the human form that follows the person’s movements with little to no delay. What sets the technology apart even further is its keen sense for detail.

Huang and Olszewski, who both recently earned their Ph.D.s from USC Viterbi, were shocked when they discovered the extended capabilities of their work.

“One of the advantages of our approach is it doesn’t just rely on a template of a human body to render a model,” Olszewski said. “Because of that, it senses unusual figures and shapes like backpacks, glasses, the outline of a dress, and they all move with the person.”

VHT puts the power of 3-D representation in the hands of a cell phone user. Ditch your controller; imagine navigating Azeroth with your actual footsteps. Or, what if your to-scale avatar could try on clothes for you before you buy them? Now that is online shopping.

Even further, VHT technology could be used to re-create a virtual college campus where student avatars could interact with their peers and professors, or to capture movement at a live virtual concert. Being “present” in a real-time virtual environment allows people to feel more connected to the people and things they care about in a socially distant age. The vision for the project is to connect people who are apart by simulating how they would interact if they were actually together.

The team received funding from numerous sources, including the Office of Naval Research and the Semiconductor Research Branch of the Defense Advanced Research Projects Agency.

Team adviser Hao Li’s professorship at USC Viterbi ends with a sweet victory as he says goodbye to academia to fully invest in his startup business, Pinscreen. “I’m extremely proud of my career and my achievements,” he said. “Even more, I’m astonished by the students I got to work alongside and mentor.”

As for his students? Huang and Olszewski have both started as full-time software engineers at Snap. Saito, another graduating member of the team, plans to join Facebook as an engineer. Ruilong Li is eager to continue developing groundbreaking technologies at the USC Institute for Creative Technologies.

“Seeing our hard work pay off was the coolest thing on the planet,” Ruilong Li said.