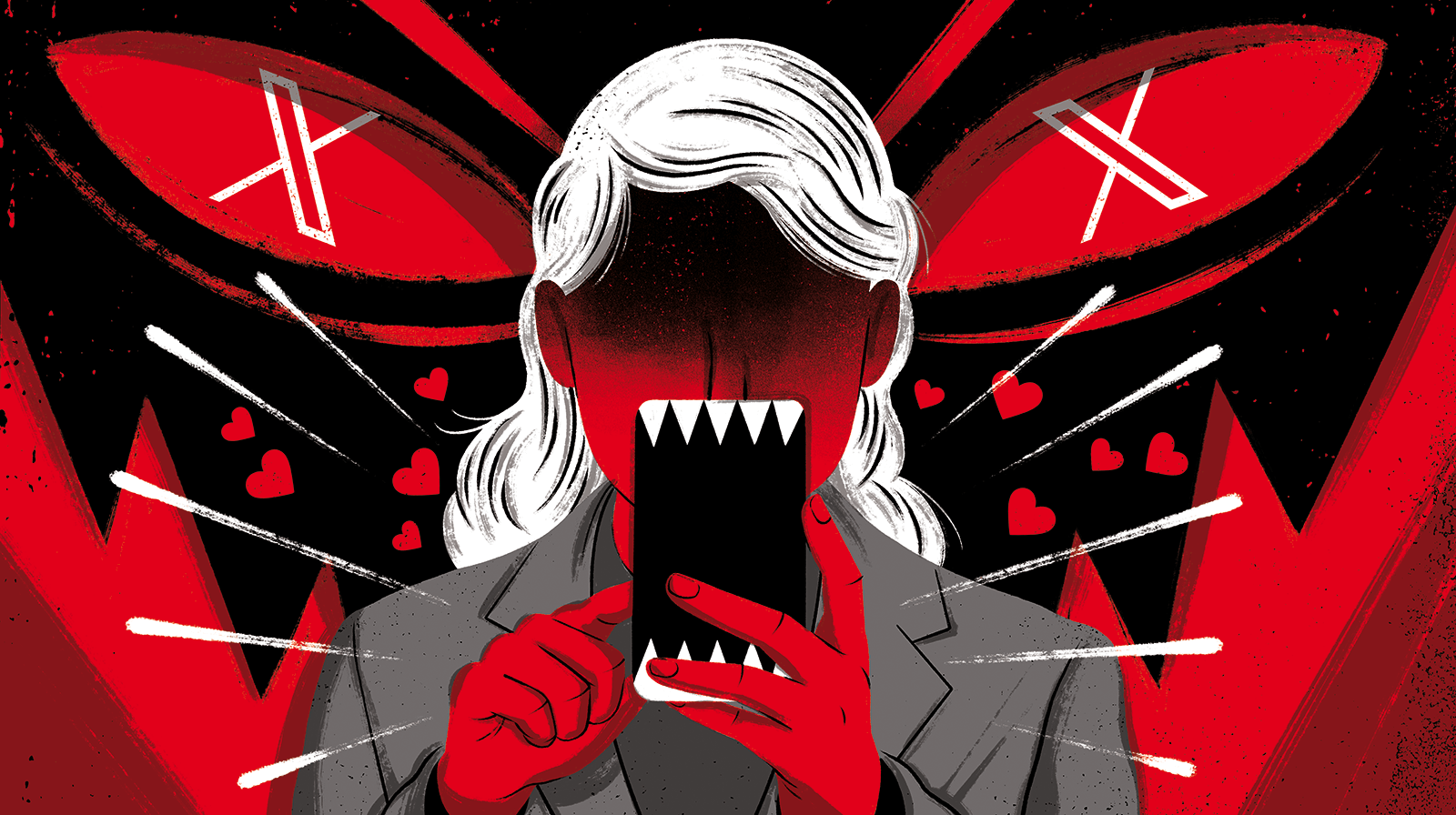

Tweeting Hate for Love

Online hate speech and conspiracy theories can have deadly consequences.

Dylann Roof, the white supremacist who murdered nine Black congregants in a Charleston, S.C., church in 2015, was radicalized online. So, too, was Robert Bowers, the killer of 11 Jews at the Tree of Life synagogue in Pittsburgh in 2018. As was Payton Gendron, who gunned down 10 Black shoppers in a Buffalo, N.Y., supermarket in 2022.

“We can draw a direct line from people’s exposure to hateful speech online and hateful actions and violence in the real world,” said Megan Squire, deputy director for data analytics at the Southern Poverty Law Center.

According to the Pew Research Center, 41% of Americans have personally experienced online harassment. Transphobia, antisemitism and racism, including xenophobia, are among the most common forms of hate speech on social media, said Keith Burghardt, a computer scientist at the USC Information Sciences Institute.

So, what motivates the haters? More than wanting to emotionally harm their targets, they seek online “validation” in the form of likes, retweets and approving comments, said Julie Jiang, a recent computer science Ph.D. graduate who studied under Professor Emilio Ferrara.

“They want the love. That’s why they’re being hateful,” said Jiang, the lead author of the recent academic paper, “Social Approval and Network Homophily as Motivators of Online Toxicity.”

Jiang and her colleagues examined more than 1 million tweets posted by about 3,000 anti-immigrant users of X, formerly known as Twitter. They built a predictive machine learning model that considered the toxicity of a tweet and the number of likes a user’s posts typically received, among other variables. Their conclusion: “The audience for an author’s hate messages [that appear nominally focused on some target minority] is like-minded online peers and friends, whose signals of approval reinforce and encourage more extreme hatred in an author’s subsequent messages.”

Jiang and her team’s study — the first to quantify whether hate speech can be a socially motivated behavior — was limited to X. However, she believes their findings could be extrapolated to other social media platforms. Additionally, Jiang said the positive feedback someone receives for becoming ever more hateful online could inspire others in their network to go darker themselves.

Perhaps most importantly, Jiang, a recent Forbes “30 Under 30” honoree, hopes the research might inspire social media companies to “change how they potentially can moderate hate speech in the future.” One idea: Disable likes for hateful tweets, depriving the nasty posters of attention — and love.