‘You Are an AI.’ ‘Yes, and I Also Do Improv Comedy.’

What would conversations with Alexa be like if she were a regular at The Second City?

Jonathan May, research lead at the USC Information Sciences Institute (ISI), is exploring this question with Justin Cho, an ISI programmer analyst, through their Selected Pairs Of Learnable ImprovisatioN, or SPOLIN, project. Their research incorporates improv dialogue into chatbots to produce more engaging interactions.

A chatbot is essentially a computer program that you can interact with through voice or text. Using artificial intelligence (AI), chatbots can serve as customer service reps on commerce websites, helping you navigate your transactions, or act as virtual assistants like Amazon Alexa, Google Assistant and Apple’s Siri.

Want to chat with SpolinBot?

Head over here to meet the AI for yourself. If you’d like to contribute to the research project, be sure to submit your conversation when complete.

In most cases, chatbots are fairly limited in their interactions. But in May and Cho’s SPOLIN project, a chatbot is trained on improvisational dialogue to help it hold more human-like conversations. Chatbots like this may be useful in talk therapy, training for conversational behavior (such as teaching kids how to text), brainstorming dialogue for characters in novels, and acting as dialogue partners in improv scenes. As their decision-making improves, improv-based chatbots could potentially work as personal assistants or team partners, kind of like J.A.R.V.I.S. from “Iron Man.”

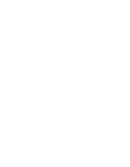

The SPOLIN research collection comprises more than 68,000 conversational examples, each consisting of a prompt and a response. Specifically, they’re examples of “yes, and” dialogue, a foundational technique that improv comedians such as Amy Poehler and Tina Fey use to engage with others and keep the conversation flowing.

For example, if Poehler said, “We’re in the Amazon jungle,” Fey might respond, “Yes, and I’ve just befriended this anaconda.”

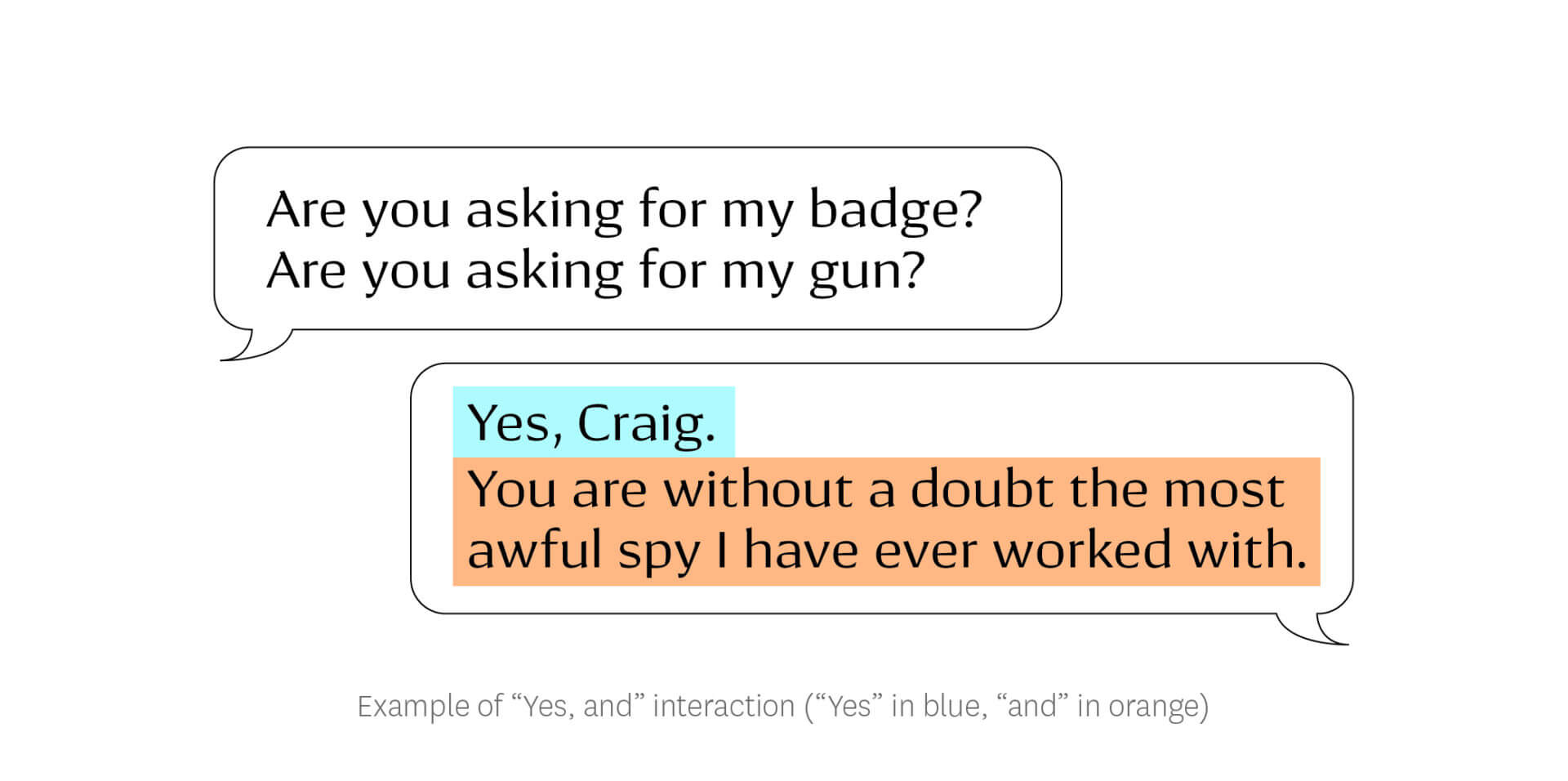

After gathering the data, Cho and May built SpolinBot, an improv chatbot ready to “yes, and” with the best human improvisers out there.

Finding common ground

May’s love of language analysis led him to work on natural language processing (NLP) projects, and he began searching for more interesting forms of data to work with.

“I’d done some improv in college and pined for those days,” said May, a research assistant professor of computer science. “Then a friend who was in my college improv troupe suggested that it would be handy to have a ‘yes, and’ bot to practice with, and that gave me the inspiration: It wouldn’t just be fun to make a bot that can improvise, it would be practical!”

“Yes, and” is a pillar of improvisation: a participant accepts the reality that another participant establishes (“yes”) and then builds on that reality by adding new information (“and”). This technique is key to establishing common ground in an interaction. As May put it, “‘Yes, and’ is the improv community’s way of saying ‘grounding.’”

These improv rules are important because they help participants build a reality together. In movie scripts, for example, roughly 10% of the lines could be considered “yes, ands,” whereas in improv at least 25% are. The difference arises because movies have settings and characters already established for audiences, while improvisers perform without a set, props or any established reality.

“Because improv scenes are built from almost no established reality, dialogue taking place in improv actively tries to reach mutual assumptions and understanding,” said Cho, a USC Viterbi Ph.D. student. “This makes dialogue in improv more interesting than most ordinary dialogue, which usually takes place with many assumptions already in place, from common sense, visual signals, etc.”

Initially, May and Cho examined typical dialogue sets such as movie scripts and subtitle collections, but those sources didn’t contain enough “yes, ands” to mine. Moreover, it can be difficult to find recorded, let alone transcribed, improv.

The friendly neighborhood improv bot

Before arriving at USC in fall 2018 as an exchange student from the Hong Kong University of Science and Technology (HKUST), Cho reached out to May to ask about NLP research projects. Once at USC, he learned about the improv project May had in mind.

“I was interested in how it touched on a niche that I wasn’t familiar with, and I was especially intrigued that there was little to no prior work in this area,” Cho said. “I was hooked when Jon said that our project will be answering a question that hasn’t even been asked yet: the question of how modeling grounding in improv through the ‘yes, and’ act can contribute to improving dialogue systems.”

Cho investigated multiple approaches to gathering improv data.

He knocked on the doors of Hollywood improv clubs, but they didn’t respond to his requests. He tried recording live improv performances in USC undergraduate acting classes, but the recording quality was poor. After combing through TV shows and podcasts, he finally came across Spontaneanation, an improv podcast hosted by the prolific actor and comedian Paul F. Tompkins that ran from 2015 to 2019.

With open-topic episodes, each featuring about 30 minutes of continuous improvisation, plus high-quality recordings and a substantial number of episodes, Spontaneanation was the perfect source to mine for “yes, ands.” The duo used this data as the starting point for SpolinBot.

“One of the cool parts of the project is that we figured out a way to just use improv,” May explained. “Spontaneanation was a great resource for us but is fairly small as data sets go — we only got about 10,000 ‘yes, ands’ from it. But we used those ‘yes, ands’ to build a classifier [program] that can look at new lines of dialogue and determine whether they’re ‘yes, ands.’”

Starting from improv dialogue also let the researchers find “yes, ands” in other sources; in fact, most of the SPOLIN data comes from movie scripts and subtitles.

“Ultimately, the SPOLIN corpus contains more than five times as many ‘yes, ands’ from non-improv sources than from improv, but we only were able to get those ‘yes, ands’ by starting with improv,” May said.
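The bootstrapping idea May describes, using a small, trusted set of improv “yes, ands” to build a classifier and then letting that classifier pick out “yes, ands” from much larger non-improv sources, can be sketched as a simple filter. This is a minimal illustration under stated assumptions, not the researchers’ code: the `DialoguePair` record, the `mine_yes_ands` function and the 0.9 threshold are hypothetical, and `toy_classifier` is a crude keyword heuristic standing in for a trained model (real “yes, ands” often contain neither “yes” nor “and”).

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List


@dataclass(frozen=True)
class DialoguePair:
    """A prompt line and the response that follows it (hypothetical record layout)."""
    prompt: str
    response: str
    source: str  # illustrative label, e.g. "improv podcast" or "movie script"


# A classifier scores a pair from 0.0 to 1.0: how likely the response is a "yes, and".
Classifier = Callable[[DialoguePair], float]


def mine_yes_ands(candidates: Iterable[DialoguePair],
                  classify: Classifier,
                  threshold: float = 0.9) -> List[DialoguePair]:
    """Keep only the pairs the classifier is confident are "yes, ands"."""
    return [pair for pair in candidates if classify(pair) >= threshold]


def toy_classifier(pair: DialoguePair) -> float:
    """Hypothetical stand-in for a model trained on improv "yes, ands".

    It only checks for a literal affirmation and a literal "and", which
    real "yes, ands" often lack; a trained model would learn the pattern.
    """
    text = pair.response.lower()
    score = 0.0
    if text.startswith(("yes", "yeah", "right")):
        score += 0.5  # accepts the partner's reality
    if " and " in f" {text} ":
        score += 0.5  # appears to build on it
    return score


# Candidate pairs from a hypothetical non-improv source.
candidates = [
    DialoguePair("We're in the Amazon jungle.",
                 "Yes, and I've just befriended this anaconda.", "movie script"),
    DialoguePair("We're in the Amazon jungle.",
                 "No, we're in a desert.", "movie script"),
]
kept = mine_yes_ands(candidates, toy_classifier)
print(len(kept))  # 1
```

Because the classifier is passed in as a plain function, swapping the toy heuristic for a real trained model would leave the filtering step unchanged.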

SpolinBot has a few controls that adjust its responses, ranging from safe and boring to funny and wacky. It also generates five response options that users can choose from to continue the conversation.
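The article doesn’t say how SpolinBot’s controls work. One common knob in text generation is sampling temperature: dividing a model’s scores by a higher temperature flattens the resulting probabilities, so less likely (wackier) choices get picked more often, while a low temperature sticks close to the safest option. The sketch below assumes such a control and applies it to a fixed list of hypothetical candidate responses with made-up scores; a real generator would sample word by word rather than choose among finished sentences. The function names `softmax_with_temperature` and `sample_options` are illustrative, and `sample_options` draws five distinct options to mirror the five choices SpolinBot offers.

```python
import math
import random
from typing import List, Optional, Sequence


def softmax_with_temperature(scores: Sequence[float], temperature: float) -> List[float]:
    """Convert raw scores to probabilities; a higher temperature flattens them."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [s / temperature for s in scores]
    top = max(scaled)  # subtract the max so math.exp cannot overflow
    exps = [math.exp(s - top) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_options(candidates: Sequence[str], scores: Sequence[float],
                   temperature: float = 1.0, k: int = 5,
                   seed: Optional[int] = None) -> List[str]:
    """Draw up to k distinct candidates, weighted by temperature-scaled scores."""
    if len(candidates) != len(scores):
        raise ValueError("need one score per candidate")
    rng = random.Random(seed)
    pool = list(zip(candidates, scores))
    chosen: List[str] = []
    for _ in range(min(k, len(pool))):
        # Recompute probabilities over what is left, so picks never repeat.
        probs = softmax_with_temperature([s for _, s in pool], temperature)
        pick = rng.choices(range(len(pool)), weights=probs, k=1)[0]
        chosen.append(pool.pop(pick)[0])
    return chosen


# Hypothetical replies to "We're in the Amazon jungle." with made-up scores.
replies = [
    "Yes, and we should find shelter before dark.",
    "Yes, and the river looks safe to cross.",
    "Yes, and I've just befriended this anaconda.",
    "Yes, and the anaconda is wearing a tiny hat.",
    "Yes, and the trees are whispering our names.",
    "Yes, and my map is written in a language nobody speaks.",
]
scores = [3.0, 2.5, 1.5, 0.5, 0.2, 0.0]

safe = sample_options(replies, scores, temperature=0.2, seed=0)
wacky = sample_options(replies, scores, temperature=5.0, seed=0)
print(safe)
print(wacky)
```

At a low temperature the highest-scoring replies tend to come first; at a high temperature the ordering becomes closer to random, so the odder replies surface more often.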

SpolinBot #goals

The duo has many plans for SpolinBot, including extending its conversational abilities beyond “yes, and.”

“We want to explore other factors that make improv interesting, such as character-building, scene-building, ‘if this usually interesting anomaly is true, what else is also true?’ and call-backs,” said Cho, using the improv term for references to objects or events mentioned in earlier dialogue turns. “We have a long way to go, and that makes me more excited for what I can explore throughout my Ph.D. and beyond.”

“Ultimately, we want to build a good conversational partner and a good creative partner,” May added, noting that even in improv, “yes, and” only marks the beginning of a conversation. “Today’s bots, SpolinBot included, aren’t great at keeping the thread of the conversation going. There should be a sense that both participants aren’t just establishing a reality, but are also experiencing that reality together.”

That point is key because, as May explained, a good partner should be an equal, not subservient like Alexa and Siri. “I’d like my partner to be making decisions and brainstorming along with me,” he said. “We should ultimately be able to reap the benefits of teamwork and cooperation that humans have long benefited from by working together. And the virtual partner has the added benefit of being much better and faster at math than me, and not actually needing to eat!”