An Act of Violence, or Just a Mannequin Challenge?

When confronting violence around the world, law enforcement and ordinary citizens alike are encouraged to be alert, especially in crowds and public spaces. Now, a USC Viterbi computer scientist is working to enhance the ability of computers to see potentially abnormal activity — and perhaps thwart an attack.

Tal Hassner of USC Viterbi’s Information Sciences Institute (ISI), whose expertise is in computer vision — an interdisciplinary field that deals with how computers can be made to gain high-level understanding from digital images or videos — has been developing a method to teach computers to identify the content of live video for a variety of purposes, including the detection of violence in crowds. Indeed, the algorithm he and his team have developed enables a computer to review hundreds of surveillance videos simultaneously, spot violence with 82 percent accuracy and alert a human to a violent episode in about four seconds — a time frame that Hassner believes might even be faster than human response.

“Violence detection by computers is not new,” said Hassner, who studies statistical pattern recognition and computer graphics. Cities such as Los Angeles and London are already wired with hundreds to thousands of closed-circuit TV cameras that feed into a central monitoring station. But these CCTV systems “are being used for historical information,” he explained. One well-documented example is the 2013 Boston Marathon bombing, whose suspects were identified from security camera footage.

Hassner believes CCTV systems shouldn’t be limited to “post-mortem” review. He envisions using computers to sort through the enormous trove of video captured by municipalities to see violent acts as they happen and alert law enforcement instantly.

“Cities already have CCTVs going to a central station,” Hassner said. “To watch 5,000 cameras all day and all night, you would need to employ an army of individuals watching CCTVs versus what a computer can process.”

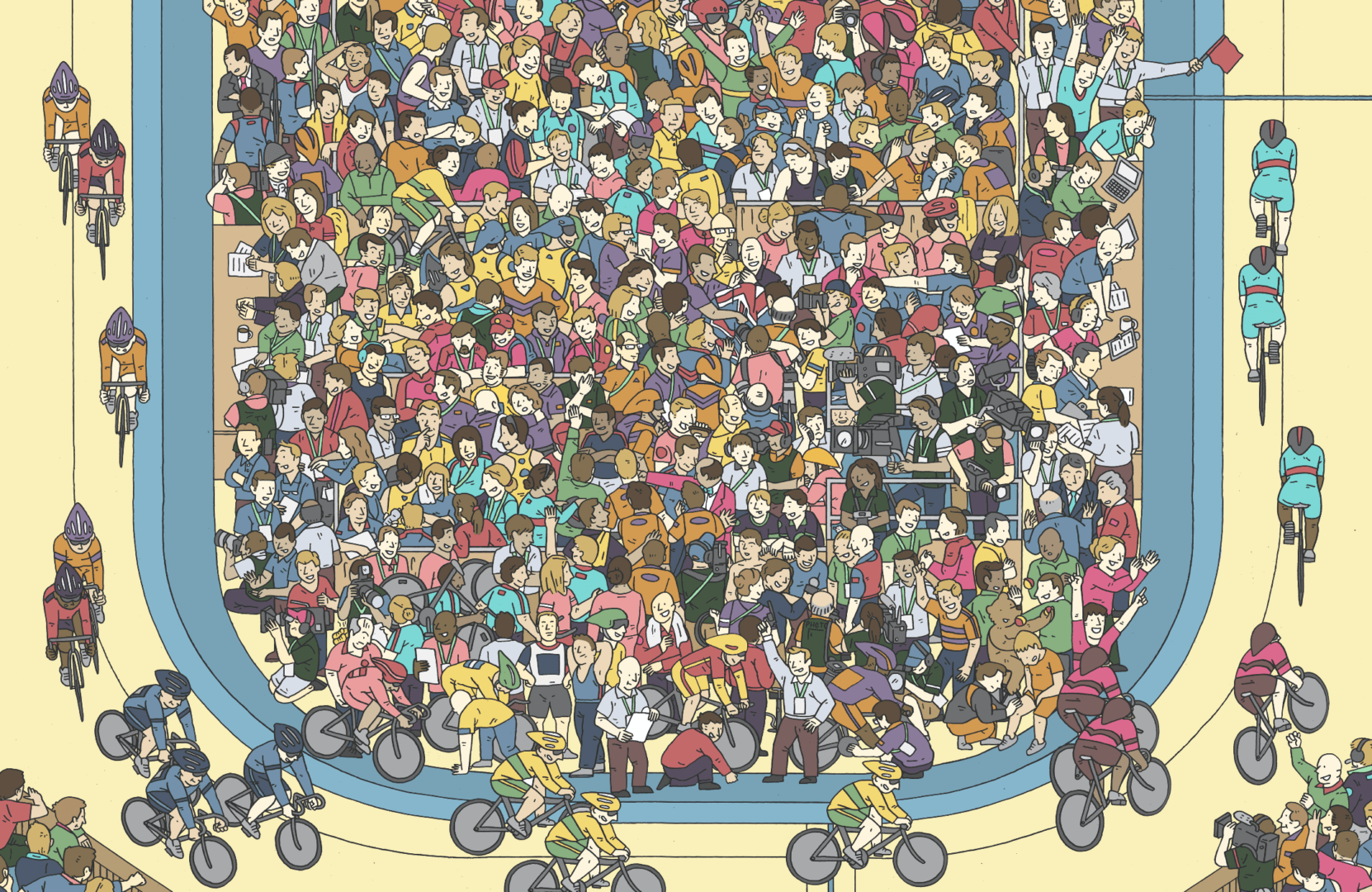

But using computer vision to interpret video and identify crowd violence is not easy. Crowds are tricky. There are obstructions — individuals cover and block one another, so it is hard to determine what is happening at any given second. It may not be clear whether the activity is malevolent or innocuous, for example, people doing “the wave” in a stadium or even a “mannequin challenge,” in which people stop suddenly in a pose that is then filmed and posted online. In addition, the quality of the video may be poor.

Teaching a computer to identify violence is different from teaching a human to identify a pattern. People rely on certain images or objects to provide cues for context. For example, an umbrella tells us it could be raining; a gun tells us there could be danger.

“We as humans often think that our way of thinking is the best for describing something to a computer,” Hassner said. Yet with computers, he explained, “we don’t need to use our own language.” In other words, we don’t need to tell a computer to look for a gun, a fistfight or any of the telltale clues people would look for to assess if a situation is in fact violent. Previous researchers have trained computers to see high-level “semantic” features, including a gun, a knife or an explosion in a frame. But capturing these images often requires clear, high-resolution video and more time to process.

Hassner suggests that this sort of high-level information is not necessary and that, in fact, identifying the specifics of a situation “makes the solution harder than the problem.”

“For a computer to determine if something is violent or nonviolent is a lot easier than figuring out if a particular individual is holding a gun,” he said.

Hassner is taking a different approach. He and a team of researchers fed nearly 250 “in the wild” YouTube videos to a computer. The videos were authentic snippets of people in real life, including crowd situations. Some of the videos showed violence erupting; others were benign. The goal, he said, is to teach computers “to understand how crowds move” and recognize “good” behavior versus bad.

In the process, Hassner and his team taught the computer to detect what he describes as changes to “low-level features” in the video, such as changes in pixelation, color or brightness.

“A single pixel changing its value is not meaningful,” he said. Rather, the team has trained the computer to examine millions of pixels across millions of frames and compute “non-trivial” statistics.

“We looked at the locomotion — what moved where at a very local level,” Hassner said. Using that data, the researchers created an algorithm to measure what they call “violent flows.”
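To make the idea of aggregate, low-level statistics concrete, here is a minimal Python sketch. It is not Hassner's algorithm, whose details this article does not give. It assumes grayscale frames stored as nested lists, and the function names `change_magnitude` and `motion_change_score` are hypothetical. The score measures how much the frame-to-frame change at each pixel itself varies over time, averaged over every pixel, so erratic motion scores high while stillness or steady motion scores near zero.

```python
from typing import List

# Assumption: a frame is a grayscale image given as rows of pixel intensities.
Frame = List[List[float]]


def change_magnitude(prev: Frame, curr: Frame) -> Frame:
    """Per-pixel absolute intensity change between two consecutive frames."""
    return [
        [abs(c - p) for p, c in zip(prev_row, curr_row)]
        for prev_row, curr_row in zip(prev, curr)
    ]


def motion_change_score(frames: List[Frame]) -> float:
    """Average, over all pixels and time steps, of how much the
    frame-to-frame change differs from one step to the next.

    Illustrative statistic only; not the published "violent flows" measure.
    """
    if len(frames) < 3:
        raise ValueError("need at least three frames")

    # One change map per pair of consecutive frames.
    mags = [change_magnitude(a, b) for a, b in zip(frames, frames[1:])]

    total = 0.0
    count = 0
    # Compare each change map with the next one, pixel by pixel.
    for m_prev, m_curr in zip(mags, mags[1:]):
        for row_prev, row_curr in zip(m_prev, m_curr):
            for a, b in zip(row_prev, row_curr):
                total += abs(b - a)
                count += 1
    return total / count


if __name__ == "__main__":
    steady = [[[v, v]] for v in (0, 10, 20, 30)]   # smooth, constant change
    erratic = [[[v, v]] for v in (0, 50, 50, 0)]   # change starts, stops, restarts
    print(motion_change_score(steady), motion_change_score(erratic))
```

No single pixel decides the outcome here; only the average over the whole clip matters, which is the spirit of the “non-trivial” statistics described above. A real system would presumably use a proper motion estimate rather than raw intensity differences.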

Can such limited information, which reveals nothing about the type of violence, be meaningful? Many academics and policymakers suggest that surveillance does not deter crime. However, proponents of surveillance believe that having information to confirm that unusual or violent acts are taking place could improve police response time. Law enforcement would also have some verification that a crime is actually occurring, which could help avoid false alarms.

Hassner thinks timely notification could be the difference between “life and death.”

Hassner admits they are not “100 percent there yet.” But the technology could certainly find an application in an athletic stadium or concert venue. The next step, he said, is to train a computer to identify individual behaviors.