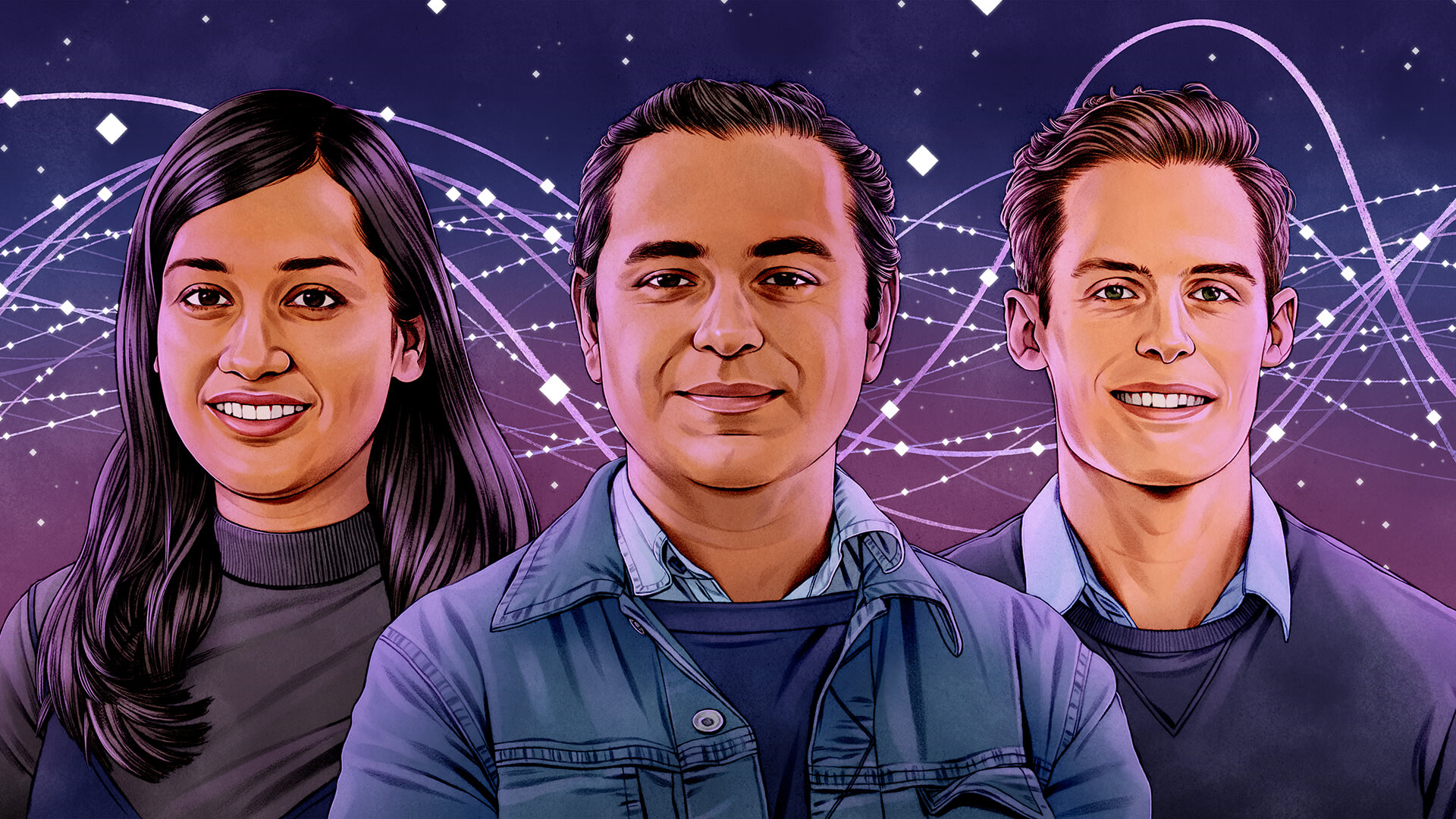

USC Alumni Paved Path for ChatGPT

ChatGPT has taken the world by storm, but the seeds of the groundbreaking technology were sown at USC Viterbi. The seminal paper “Attention Is All You Need,” which laid the foundation for ChatGPT and other generative AI systems, was co-authored by Ashish Vaswani, Ph.D. CS ’14, and Niki Parmar, M.S. CS ’15.

The landmark paper was presented at the 2017 Conference on Neural Information Processing Systems (NeurIPS), one of the top conferences in AI and machine learning. In the paper, the researchers introduced the transformer architecture, a powerful type of neural network that has become widely used for natural language processing tasks, from text classification to language modeling.

Attention is all you need

Transformer models apply a mathematical technique called “attention” that lets them selectively focus on different words and phrases in the input text and generate more coherent, contextually relevant responses. By weighing the relationships between words, the model can better capture the underlying meaning and context of the input. ChatGPT uses a variant of the transformer called GPT (generative pre-trained transformer).
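For readers who want to see the idea in miniature, here is a rough sketch of the scaled dot-product attention described in the paper, softmax(QKᵀ / √d)V, written in plain Python. It is an illustration under simplifying assumptions, not the authors' implementation: the three-word input and its two-dimensional vectors are made up, the helper names `softmax` and `attention` are hypothetical, and the same vectors stand in for queries, keys and values, whereas a real transformer learns separate projections for each.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    dim = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Compare this word's query with every word's key.
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(dim) for k in keys]
        weights = softmax(scores)
        # Blend the value vectors according to the attention weights.
        mixed = [sum(w * v[i] for w, v in zip(weights, values))
                 for i in range(len(values[0]))]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights

# Toy, made-up 2-dimensional vectors for a three-word input (assumption).
tokens = ["the", "cat", "sat"]
vectors = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = attention(vectors, vectors, vectors)
for token, row in zip(tokens, weights):
    print(token, [round(w, 2) for w in row])
```

Each printed row shows how much one word "attends" to every word in the input, including itself. Every word is compared with every other word, not just its neighbors, and each row can be computed independently of the others.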

The transformer architecture is considered a paradigm shift in artificial intelligence and natural language processing, making recurrent neural networks (RNNs), the once-dominant architecture in language processing models, largely obsolete.

The transformer is also regarded as a crucial element of ChatGPT’s success, alongside other innovations in deep learning and open-source distributed training.

“The important components in this paper were doing parallel computation across all the words in the sentence and the ability to learn and capture the relationships between any two words in the sentence,” said Parmar, “not just neighboring words as in long short-term memory networks and convolutional neural network-based models.”

A universal model

Vaswani refers to ChatGPT as “a clear landmark in the arc of AI,” but it wasn’t necessarily his goal when he started working on the transformer model in 2016.

“For me, personally, I was seeking a universal model. A single model that would consolidate all modalities and exchange information between them, just like the human brain.”

Since its publication, “Attention Is All You Need” has received more than 60,000 citations, according to Google Scholar. Its total citation count continues to increase as researchers build on its insights and apply transformer architecture techniques to new problems, from image and music generation to predicting protein properties for medicine.

It also set the stage for a third USC Viterbi engineer to take up the torch and help carry this foundational research into the product we know today as ChatGPT. In September 2022, Barret Zoph, B.S. CS ’16, joined OpenAI as tech lead, playing a fundamental role in developing the language model.

“It’s especially interesting to think back to when I first got started on research in 2016. The amount of progress from that time to where it is now is almost unfathomable,” Zoph said.

“I think exponential growth is quite a hard thing to grasp. But I think [models like ChatGPT] are going to continue to get better, to help people and to improve their day-to-day lives,” he added.

In keeping with this legacy, on March 9, USC President Carol L. Folt announced that the university is launching the Center for Generative AI and Society with $10 million for research that draws together leading experts from USC Viterbi and four other schools: USC Annenberg School for Communication and Journalism, USC School of Cinematic Arts, USC Iovine and Young Academy and USC Rossier School of Education.